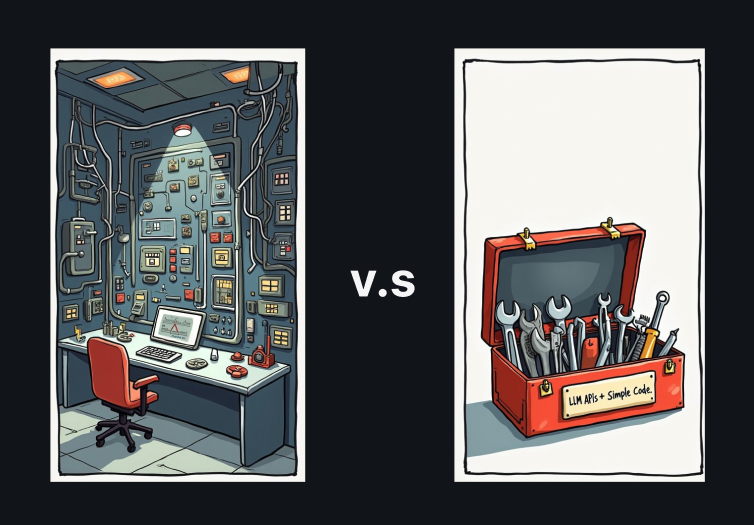

Do you need a framework to build out your AI Agents?

Or can you use basic tooling to get it done?

Published: July 15th 2025

OK, so you’ve decided you’re going to build an AI agent assistant.

Now you need to figure out how to build it. You’ve probably heard of plenty of agent frameworks, such as:

- LangGraph

- Amazon Bedrock's AI Agent framework

- CrewAI

- and Microsoft’s AutoGen

One question you’re bound to ask is:

“Which LLM/agent framework should I use to build this?”

Often, the answer may well be “none”.

Based on its work with dozens of teams building LLM agents across industries, Anthropic reports:

“Consistently, the most successful implementations use simple, composable patterns, rather than complex frameworks”

Dexter Horthy, founder of HumanLayer and author of “12-Factor Agents”, says:

“I've talked to a lot of really strong founders, in and out of YC, who are all building really impressive things with AI. Most of them are rolling the stack themselves. I don't see a lot of frameworks in production customer-facing agents.”

I’ve tried both approaches myself, and I tend to agree: start with the simple approach.

But what does it actually mean to not use a framework?

One of the core features of agentic systems is tool calling: the model decides which tool to call and with what arguments, and your code then runs it. The LLM APIs from major providers such as Anthropic and OpenAI support tool calling directly, so many of the features frameworks provide can instead be implemented in a few lines of code.
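To make “a few lines of code” concrete, here is a minimal sketch of an agent loop built on nothing but a model call and a dictionary of tools. It assumes a hypothetical `call_model` function that wraps your provider’s API; the message dicts loosely mirror the content blocks of Anthropic’s Messages API (`tool_use`, `tool_result`, `stop_reason`), but the real SDK returns objects rather than dicts, so field access would need adapting. `get_weather` and `fake_model` are illustrative stand-ins so the loop can run offline.

```python
from typing import Any, Callable

# Hypothetical tool: a stand-in for a real weather lookup.
def get_weather(city: str) -> str:
    return f"It is sunny in {city}."

# Registry mapping tool names (as the model sees them) to Python functions.
TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}

def run_agent(call_model: Callable[[list], dict], user_input: str, max_turns: int = 5) -> str:
    """Minimal agent loop: call the model, run any requested tools, feed results back."""
    messages: list[dict[str, Any]] = [{"role": "user", "content": user_input}]
    for _ in range(max_turns):
        response = call_model(messages)
        # Keep the assistant turn in the history so the model sees its own tool requests.
        messages.append({"role": "assistant", "content": response["content"]})
        if response["stop_reason"] != "tool_use":
            # No tool requested: treat the text blocks as the final answer.
            return "".join(b["text"] for b in response["content"] if b["type"] == "text")
        # Execute every tool the model asked for and collect the results.
        results = []
        for block in response["content"]:
            if block["type"] == "tool_use":
                output = TOOLS[block["name"]](**block["input"])
                results.append({"type": "tool_result", "tool_use_id": block["id"], "content": output})
        # Tool results go back to the model as the next user turn.
        messages.append({"role": "user", "content": results})
    raise RuntimeError("Agent did not finish within max_turns")

# Scripted fake model for offline testing: first requests the tool, then answers.
def fake_model(messages: list) -> dict:
    last = messages[-1]["content"]
    if isinstance(last, str):
        return {"stop_reason": "tool_use",
                "content": [{"type": "tool_use", "id": "call_1",
                             "name": "get_weather", "input": {"city": "Paris"}}]}
    return {"stop_reason": "end_turn",
            "content": [{"type": "text", "text": "Answer: " + last[0]["content"]}]}

print(run_agent(fake_model, "What's the weather in Paris?"))
# prints: Answer: It is sunny in Paris.
```

In this sketch, going live would mean replacing `fake_model` with a function that calls a real provider; the loop itself, the tool registry, and the message history all stay as plain Python you can read, log, and step through in a debugger.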

In fact, a lot of the larger frameworks, once you dig in and see how they work underneath, are just using these same tool calling abilities on the LLM APIs.

The extra layers of abstraction these frameworks add can make it harder to understand how your application works, harder to debug, and harder to customize.

They can also become more unwieldy to work with as your application grows in size and complexity.

On the plus side, frameworks do make it easier to get started by simplifying standard low-level tasks such as calling LLMs, tool calling, and memory storage for the LLM. Some also provide useful tooling for carrying out specific tasks.
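Much of the convenience frameworks offer for tool calling comes down to turning ordinary functions into the JSON schema an LLM API expects. Below is a minimal sketch of doing that yourself, assuming only simple scalar parameter types. The `tool` decorator and the `TOOL_FUNCS`/`TOOL_SCHEMAS` registries are hypothetical names; the generated definitions follow the `name`/`description`/`input_schema` shape of Anthropic’s tool definitions, and other providers wrap the schema slightly differently.

```python
import inspect
from typing import Any, Callable, get_type_hints

# Map Python annotations to JSON Schema types (assumption: scalar types only).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

TOOL_FUNCS: dict[str, Callable[..., Any]] = {}    # name -> function, for dispatch
TOOL_SCHEMAS: list[dict[str, Any]] = []           # definitions to send to the API

def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register func as a tool and derive a tool definition from its signature."""
    hints = get_type_hints(func)
    properties: dict[str, Any] = {}
    required: list[str] = []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated parameters fall back to "string".
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name, str), "string")}
        # Parameters without defaults are required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    TOOL_SCHEMAS.append({
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "input_schema": {"type": "object", "properties": properties, "required": required},
    })
    TOOL_FUNCS[func.__name__] = func
    return func

@tool
def add(a: int, b: int = 0) -> int:
    """Add two integers."""
    return a + b

print(TOOL_SCHEMAS[0]["input_schema"]["required"])  # prints: ['a']
```

In a setup like this, `TOOL_SCHEMAS` would be passed as the list of tools in a request, and `TOOL_FUNCS` used to look up and run whichever function the model asks for by name.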

My advice: keep it simple to start, and see how far you can get with the basic LLM APIs.

If you start hitting bottlenecks, that may be a good time to explore some of the larger frameworks and see whether they help.