I've been hearing a lot about "context engineering" recently

It's a newer and better way of thinking about "prompt engineering"

Published: July 21st 2025

I finally got a chance to look into it and it's pretty interesting

Static prompts are not enough. What we need is dynamic context fed into the LLM so that it can carry out its task well.

What people are saying

"I really like the term 'context engineering' over prompt engineering. It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM."

Tobi Lütke, Shopify CEO

"+1 for 'context engineering' over 'prompt engineering'"

Andrej Karpathy, OpenAI Founding Member, Ex-Director of AI @ Tesla

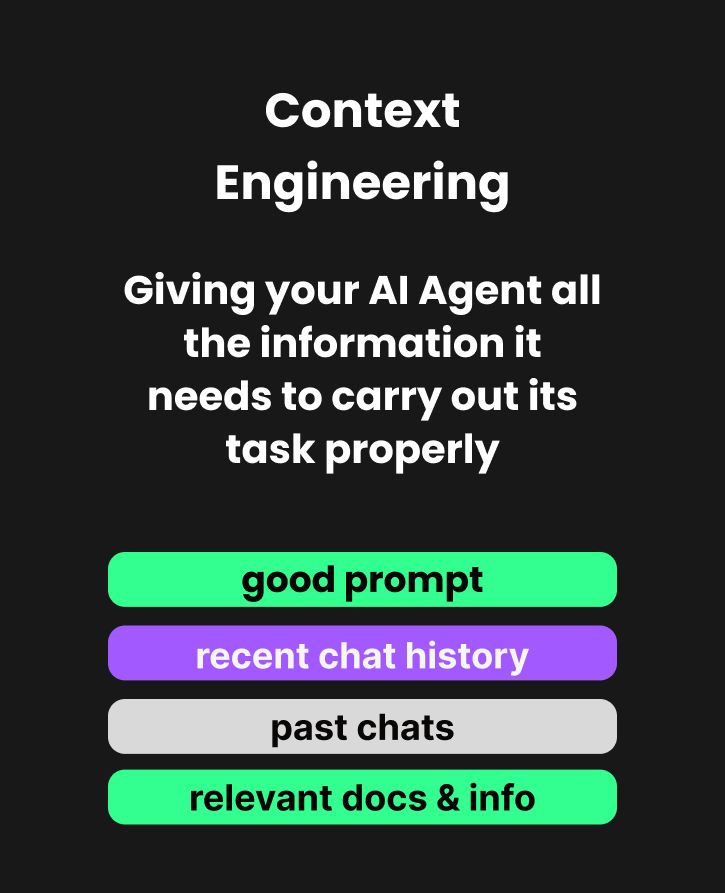

So what is Context Engineering?

Whereas prompt engineering was mostly about crafting the perfect static instructions for an LLM, context engineering is about dynamically giving the LLM the most relevant information for the problem it is currently trying to solve, to maximise its chances of success.

This context includes:

→ The initial instructions or system prompt

→ The current question the user has

→ The recent chat history between the user and the LLM, as well as recent agent state (short-term memory)

→ Previous conversations (long-term memory)

→ Information retrieved from relevant sources (RAG), including documents, PDFs and web searches

→ A list of tools that could be useful for the current query
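To make the list above concrete, here is a minimal sketch of how those pieces could be assembled into a single prompt at request time. The names `ContextSources` and `build_context`, the section titles and the `max_recent` cutoff are all assumptions made for illustration, not part of any particular framework.

```python
from dataclasses import dataclass, field


@dataclass
class ContextSources:
    """Hypothetical container for the pieces of context listed above."""
    system_prompt: str
    user_question: str
    recent_messages: list[str] = field(default_factory=list)    # short-term memory
    past_summaries: list[str] = field(default_factory=list)     # long-term history
    retrieved_docs: list[str] = field(default_factory=list)     # RAG results
    tool_descriptions: list[str] = field(default_factory=list)  # available tools


def build_context(sources: ContextSources, max_recent: int = 5) -> str:
    """Assemble one prompt string, skipping any section that is empty."""
    # Keep only the latest turns so the prompt stays focused on the current task.
    recent = sources.recent_messages[-max_recent:] if max_recent > 0 else []
    sections = [
        ("Instructions", [sources.system_prompt]),
        ("Available tools", sources.tool_descriptions),
        ("Relevant documents", sources.retrieved_docs),
        ("Earlier conversations", sources.past_summaries),
        ("Recent chat", recent),
        ("Current question", [sources.user_question]),
    ]
    parts = []
    for title, items in sections:
        if items:
            parts.append(f"## {title}\n" + "\n".join(items))
    return "\n\n".join(parts)
```

The point of the sketch is that the prompt is rebuilt for every request from whatever is relevant at that moment, rather than written once and reused.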

Giving the LLM all this information and context about the problem gives it the best chance at coming up with good results.

In most cases, agents and LLMs fail not because of weak reasoning, but because we don't give them enough information and context about the problem we are asking them to solve.

A simple example: a calendar booking agent

Without knowledge (context) of your current calendar, the AI agent will not be able to book appointments for you properly, no matter how powerful the LLM is. It won't know when you are free and when you are busy.

Give it that information though, and it will be able to find the right slots just fine.
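As a rough sketch of what "giving it that information" could look like, the snippet below turns a list of busy intervals into plain text that can be placed in the agent's prompt. The helper names `free_slots` and `calendar_context`, the 30-minute minimum and the text layout are assumptions for illustration. A real agent would read the busy intervals from a calendar API.

```python
from datetime import datetime, timedelta


def free_slots(busy, day_start, day_end, min_length=timedelta(minutes=30)):
    """Return (start, end) gaps between busy intervals that are long enough."""
    slots = []
    cursor = day_start
    for start, end in sorted(busy):
        if start - cursor >= min_length:
            slots.append((cursor, start))
        # Move past this meeting, which also handles overlapping meetings.
        cursor = max(cursor, end)
    if day_end - cursor >= min_length:
        slots.append((cursor, day_end))
    return slots


def calendar_context(busy, day_start, day_end):
    """Render the schedule as plain text the LLM can read in its prompt."""
    lines = ["Busy:"]
    lines += [f"- {s:%H:%M} to {e:%H:%M}" for s, e in sorted(busy)]
    lines.append("Free:")
    lines += [f"- {s:%H:%M} to {e:%H:%M}"
              for s, e in free_slots(busy, day_start, day_end)]
    return "\n".join(lines)


# Example day with two meetings (made-up data).
day = datetime(2025, 7, 21)
busy = [
    (day.replace(hour=13), day.replace(hour=14, minute=30)),
    (day.replace(hour=9), day.replace(hour=10)),
]
print(calendar_context(busy, day.replace(hour=9), day.replace(hour=17)))
```

With this text in its context, the model no longer has to guess your availability. It can pick from the listed free slots.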

That's what context engineering is all about: giving the LLM the right information for the problem at hand.